Convolutional Neural Networks

Convolutional Neural Networks (CNNs) have become a powerful tool in deep learning, transforming fields such as computer vision, natural language processing, and robotics. CNNs are especially well suited to image recognition tasks because they can automatically learn relevant features from raw input data. In this blog post, we will delve into the workings of CNNs, exploring their architecture, components, and applications.

What are convolutional neural networks?

Convolutional Neural Networks (CNNs) are a class of deep learning algorithms specifically designed for processing and analyzing visual data, such as images and videos. They are inspired by the human visual system, which is known for its ability to identify patterns and features in the visual input.

CNNs are widely used in computer vision tasks, such as image classification, object detection, segmentation, and image generation. The key idea behind CNNs is to use convolutional layers to automatically learn and extract relevant features from the input data, which are then used for making predictions.

How do convolutional neural networks work?

Convolutional neural networks stand out from other neural networks for their performance on image, speech, and audio signal inputs. They have three main types of layers: the convolutional layer, the pooling layer, and the fully-connected (FC) layer.

The convolutional layer is the first layer of a convolutional network. Convolutional layers can be followed by additional convolutional layers or pooling layers, and the fully-connected layer is the final layer. With each layer, the CNN detects increasingly complex features that cover larger portions of the image. Earlier layers focus on simple features, such as colors and edges. As the image data progresses through the layers of the CNN, the network starts to recognize larger elements or shapes of the object until it finally identifies the intended object.
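To see how the data changes shape on its way through these layers, the sketch below traces the sizes through a small hypothetical network. It assumes stride-1 convolution without padding and 2x2 pooling with a stride of 2; the function names `conv_out` and `pool_out` and all layer sizes are illustrative choices, not taken from a specific architecture.

```python
def conv_out(size, kernel):
    """Spatial size after a stride-1 convolution with no padding."""
    return size - kernel + 1


def pool_out(size, window=2, stride=2):
    """Spatial size after pooling; windows that would run past the edge are dropped."""
    return (size - window) // stride + 1


# Hypothetical network: 28x28 grayscale input -> conv layer (8 filters, 3x3)
# -> 2x2 max pooling -> flatten -> fully-connected layer with 10 outputs
side = 28
side = conv_out(side, 3)   # 26x26 feature maps
side = pool_out(side)      # 13x13 feature maps
flat = side * side * 8     # 8 feature maps of 13x13 flattened into one vector
print(side, flat)          # 13 1352
```

The fully-connected layer at the end would then map those 1352 values to the 10 outputs.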

Convolutional layer

A convolutional layer is a fundamental building block of Convolutional Neural Networks (CNNs), which are widely used for image and video recognition, natural language processing, and other problems involving grid-like data. The convolutional layer plays a crucial role in extracting features from input data.

The primary purpose of a convolutional layer is to perform convolution operations on the input data. Convolution is a mathematical operation that involves sliding a small filter (also known as a kernel) over the input data and computing the dot product between the filter and the local regions of the input. This process allows the layer to detect patterns and features within the input.
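To make the sliding-window dot product concrete, here is a minimal plain-Python sketch of a single-channel convolution with no padding and a stride of 1. The function name `conv2d`, the sample image, and the hand-set edge-detecting kernel are hypothetical; in a real CNN the kernel weights are learned rather than chosen by hand.

```python
def conv2d(image, kernel):
    """Valid (no padding), stride-1 2D convolution on a single channel.

    As in most deep learning frameworks, this computes cross-correlation:
    the kernel is slid over the input without being flipped first.
    """
    ih, iw = len(image), len(image[0])
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = ih - kh + 1, iw - kw + 1
    output = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            # Dot product between the kernel and the local region starting at (i, j)
            total = 0
            for di in range(kh):
                for dj in range(kw):
                    total += image[i + di][j + dj] * kernel[di][dj]
            row.append(total)
        output.append(row)
    return output


# A 4x5 image with a vertical edge: dark columns (0) next to bright columns (9)
image = [
    [0, 0, 9, 9, 9],
    [0, 0, 9, 9, 9],
    [0, 0, 9, 9, 9],
    [0, 0, 9, 9, 9],
]
# Hypothetical hand-set vertical-edge kernel
kernel = [
    [-1, 0, 1],
    [-1, 0, 1],
    [-1, 0, 1],
]
feature_map = conv2d(image, kernel)
print(feature_map)  # [[27, 27, 0], [27, 27, 0]]
```

The feature map responds strongly where the window straddles the edge and is zero where the window covers only the uniform bright region.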

The convolutional layer's key components are:

Filter/Kernel: A small matrix of learnable weights. Common kernel sizes are 1x1, 3x3, and 5x5, and each kernel extends through all channels of its input (for example, a 3x3 kernel applied to an RGB image has 3x3x3 weights). During training, the network learns the optimal values of these kernel weights.
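Because the same kernel weights are reused at every position of the input, the number of learnable weights in a convolutional layer depends only on the kernel size and the numbers of input and output channels, not on the image size. The sketch below counts them, assuming (as in common frameworks) that each filter spans all input channels and has one bias term; the function name `conv_layer_params` is hypothetical.

```python
def conv_layer_params(kernel_size, in_channels, out_channels, bias=True):
    """Number of learnable parameters in a 2D convolutional layer.

    Each of the out_channels filters is a kernel_size x kernel_size x in_channels
    block of weights, plus (optionally) one bias per filter.
    """
    weights = kernel_size * kernel_size * in_channels * out_channels
    biases = out_channels if bias else 0
    return weights + biases


# 64 filters of size 3x3 over an RGB (3-channel) input
print(conv_layer_params(3, 3, 64))  # 3*3*3*64 + 64 = 1792
```

The result is the same whether the input image is 32x32 or 1024x1024.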

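The convolutional layer's key advantages are parameter sharing (the same kernel weights are reused at every position of the input, so the number of weights does not grow with the image size), local connectivity (each output value depends only on a small region of the input), and the ability to detect a pattern wherever it appears in the input.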

Typically, multiple convolutional layers are stacked together in a CNN, followed by activation functions (e.g., ReLU), pooling layers (to reduce spatial dimensions), and fully connected layers for classification or regression tasks.
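The activation step mentioned above is most often ReLU, which sets negative values to zero and leaves positive values unchanged. A minimal sketch applying it element-wise to a feature map; the function name `relu` and the sample values are hypothetical.

```python
def relu(feature_map):
    """Apply ReLU element-wise: negative responses become 0, positives pass through."""
    return [[max(0, value) for value in row] for row in feature_map]


# Hypothetical feature map produced by a convolutional layer
feature_map = [
    [3, -1, 0],
    [-4, 5, -2],
]
print(relu(feature_map))  # [[3, 0, 0], [0, 5, 0]]
```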

CNNs have been instrumental in achieving state-of-the-art performance in various computer vision tasks and have revolutionized the field of deep learning.

Pooling Layer

In Convolutional Neural Networks (CNNs), the Pooling Layer is a critical component used to reduce the spatial dimensions (width and height) of the input feature maps while retaining essential information. Pooling is typically applied after one or more convolutional layers and is often followed by further convolutional layers or, near the end of the network, by fully-connected layers.

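The Pooling Layer serves two main goals: it reduces the size of the feature maps, which cuts the computation and memory needed by later layers, and it makes the learned representation more robust to small shifts of features in the input.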

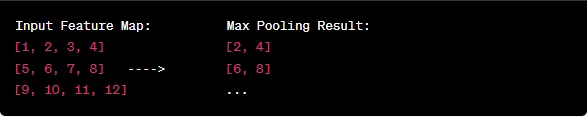

The most common type of Pooling Layer is the Max Pooling Layer, which divides the input feature map into rectangular regions (most often 2x2 windows with a stride of 2, so the regions do not overlap) and selects the maximum value from each region. The maximum is the strongest activation of the feature in that region, so max pooling records whether the feature was detected there while discarding its exact position.

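For example, 2x2 max pooling with a stride of 2 splits a 4x4 feature map into four non-overlapping 2x2 blocks and keeps the largest value of each, producing a 2x2 output.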
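A minimal plain-Python sketch of 2x2 max pooling with a stride of 2 on a single feature map. The function name `max_pool2d` and the sample values are hypothetical, and real frameworks additionally handle padding and batched, multi-channel inputs.

```python
def max_pool2d(feature_map, size=2, stride=2):
    """Max pooling over size x size windows moved by `stride` (no padding).

    Windows that would run past the edge are dropped, so a 4x4 input
    with size=2, stride=2 gives a 2x2 output.
    """
    h, w = len(feature_map), len(feature_map[0])
    output = []
    for i in range(0, h - size + 1, stride):
        row = []
        for j in range(0, w - size + 1, stride):
            window = [feature_map[i + di][j + dj]
                      for di in range(size) for dj in range(size)]
            row.append(max(window))  # keep the strongest activation in the window
        output.append(row)
    return output


feature_map = [
    [1, 3, 2, 1],
    [4, 6, 5, 0],
    [7, 2, 9, 8],
    [1, 0, 3, 4],
]
print(max_pool2d(feature_map))  # [[6, 5], [7, 9]]
```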

Other types of pooling layers include Average Pooling, which calculates the average value within each region, and Global Average Pooling, which takes the average across the entire feature map.
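For comparison, here is a sketch of average pooling and global average pooling on the same kind of input. The function names `avg_pool2d` and `global_avg_pool` and the sample values are hypothetical; global average pooling is shown reducing each channel's feature map to one number.

```python
def avg_pool2d(feature_map, size=2, stride=2):
    """Average pooling over size x size windows moved by `stride` (no padding)."""
    h, w = len(feature_map), len(feature_map[0])
    output = []
    for i in range(0, h - size + 1, stride):
        row = []
        for j in range(0, w - size + 1, stride):
            window = [feature_map[i + di][j + dj]
                      for di in range(size) for dj in range(size)]
            row.append(sum(window) / len(window))
        output.append(row)
    return output


def global_avg_pool(feature_maps):
    """Average each whole feature map (one per channel) down to a single number."""
    return [sum(sum(row) for row in fm) / (len(fm) * len(fm[0]))
            for fm in feature_maps]


feature_map = [
    [1, 3, 2, 1],
    [4, 6, 5, 0],
    [7, 2, 9, 8],
    [1, 0, 3, 4],
]
print(avg_pool2d(feature_map))         # [[3.5, 2.0], [2.5, 6.0]]
print(global_avg_pool([feature_map]))  # [3.5]
```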

It's important to note that the Pooling Layer does not introduce any learnable parameters; it is a fixed operation applied during the forward pass. The CNN learns the optimal filters and parameters during the training process in the convolutional layers, and the pooling layer helps in summarizing the learned features efficiently.

Fully-Connected Layer

The fully-connected (FC) layer is a standard feedforward neural network layer. Whereas convolutional layers extract local features through convolutions, the fully-connected layer learns global patterns and relationships among these extracted features.

After passing the input through several convolutional and pooling layers, the data is eventually flattened into a 1-dimensional vector. This flattened vector is then fed into the fully-connected layer. Each neuron in the FC layer is connected to every neuron in the previous layer, which means that the outputs from all the neurons in the previous layer contribute to the computation of each neuron in the FC layer.

Mathematically, the operation of a fully-connected layer can be represented as follows:

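Output = Activation(W · Input + b)

where W is the weight matrix, with one row of weights per neuron in the FC layer; Input is the flattened input vector; b is the bias vector; and Activation is a nonlinear function such as ReLU (or softmax in the output layer of a classifier).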

The purpose of the fully-connected layer is to learn complex patterns and representations from the extracted features, making it possible for the network to perform tasks such as classification, regression, or any other supervised learning tasks.
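The flattening step and the formula Output = Activation(W · Input + b) can be sketched in plain Python as follows. The function names `flatten` and `dense`, the feature maps, and the weight values are all hypothetical; in practice W and b are learned during training.

```python
def flatten(feature_maps):
    """Flatten a list of 2D feature maps (channels x height x width) into one vector."""
    return [value for fm in feature_maps for row in fm for value in row]


def dense(inputs, weights, biases, activation):
    """Fully-connected layer: computes activation(W x + b) for each output neuron.

    weights has one row per output neuron and one weight per input in each row,
    so every input contributes to every output neuron.
    """
    outputs = []
    for w_row, b in zip(weights, biases):
        z = sum(w * x for w, x in zip(w_row, inputs)) + b
        outputs.append(activation(z))
    return outputs


def relu(z):
    return max(0.0, z)


# Two hypothetical 2x2 feature maps from earlier conv/pool layers
feature_maps = [
    [[1.0, 0.0], [2.0, 1.0]],
    [[0.0, 3.0], [1.0, 0.0]],
]
x = flatten(feature_maps)  # 8 values
# Hypothetical weights for 2 output neurons (2 rows x 8 inputs) and their biases
W = [
    [0.5, 0.0, 0.5, 0.0, 0.0, 0.5, 0.0, 0.0],
    [-1.0, 0.0, 0.0, 0.0, 0.0, -1.0, 0.0, 0.0],
]
b = [0.5, 1.0]
print(dense(x, W, b, relu))  # [3.5, 0.0]
```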

It's worth noting that fully-connected layers are typically used in the later stages of CNN architectures, after the initial convolutional and pooling layers have extracted relevant features. As CNNs have evolved, some modern architectures have started to use global average pooling or other techniques in place of fully-connected layers to reduce the number of parameters and enhance model interpretability.
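A quick parameter count shows why global average pooling reduces the number of parameters. The sketch assumes a hypothetical final stage with 512 feature maps of size 7x7 feeding a 1000-class output; the sizes and the function name `dense_params` are illustrative, not taken from a specific model.

```python
def dense_params(n_inputs, n_outputs):
    """Weights plus biases of a fully-connected layer."""
    return n_inputs * n_outputs + n_outputs


# Hypothetical final feature maps: 512 channels of size 7x7, feeding 1000 classes
channels, height, width, classes = 512, 7, 7, 1000

# Option 1: flatten all 7*7*512 = 25,088 values into a fully-connected layer
flatten_fc = dense_params(channels * height * width, classes)

# Option 2: global average pooling first (one value per channel), then the FC layer
gap_fc = dense_params(channels, classes)

print(flatten_fc)  # 25089000
print(gap_fc)      # 513000
```

Averaging each feature map to a single value shrinks this classifier layer by roughly a factor of 49, the number of spatial positions in a 7x7 map.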

Types of convolutional neural networks

Many CNN architectures are commonly used in computer vision, each designed to address specific challenges and tasks in computer vision and image processing. The field of deep learning is continually evolving, and new architectures and improvements are regularly being proposed.